In the last few years alone, user experience (UX) has quickly become the driving force behind nearly every form of technology that we interact with. Some might go as far as to argue that in certain cases, the design of a product is even more important than its utility.

Whether it’s a voice user interface, wearable gadget, or otherwise, now more than ever, people are yearning for seamless, personalized experiences — and with the growing presence of agents embedded into the products we use every day, human-agent interaction (HAI) design will be even more critical going forward.

We want agent-based experiences that are intuitive and easy to use, enriching and facilitating our lives and routines, while remaining efficient, joyful, and sustainable, no matter how much the experience may mature over time.

And as agent-based interfaces continue to evolve, it’s only a matter of time until our inevitable shift from digital assistants to digital companion agents — context-aware, character-based agents that provide us with a truly personalized experience, tailored to each individual user.

We’ll soon move away from the utilitarian format, in which agents wait for our command, and opt instead for non-linear, contextual experiences, in which the agents interact with us both proactively and reactively.

Rather than limiting these agent-based experiences to one output modality (like speech or text alone), digital companion agents engage us in rich, dynamic experiences via multiple synchronized modalities, including speech, sound, movement, LEDs, and on-screen visuals.

Unlike designing experiences for an app or website, designing experiences on an agent is much more complex, as the agent must take its user and context into account for each potential interaction.

It also needs to know how to behave in the wild, throughout various unpredictable situations, making the design process all the more challenging.

At Intuition Robotics, we believe in fully democratizing the agent-based experience design and creation process — and with Q.Tools, we’ve provided HAI design teams with a means to do so, at scale, like never before.

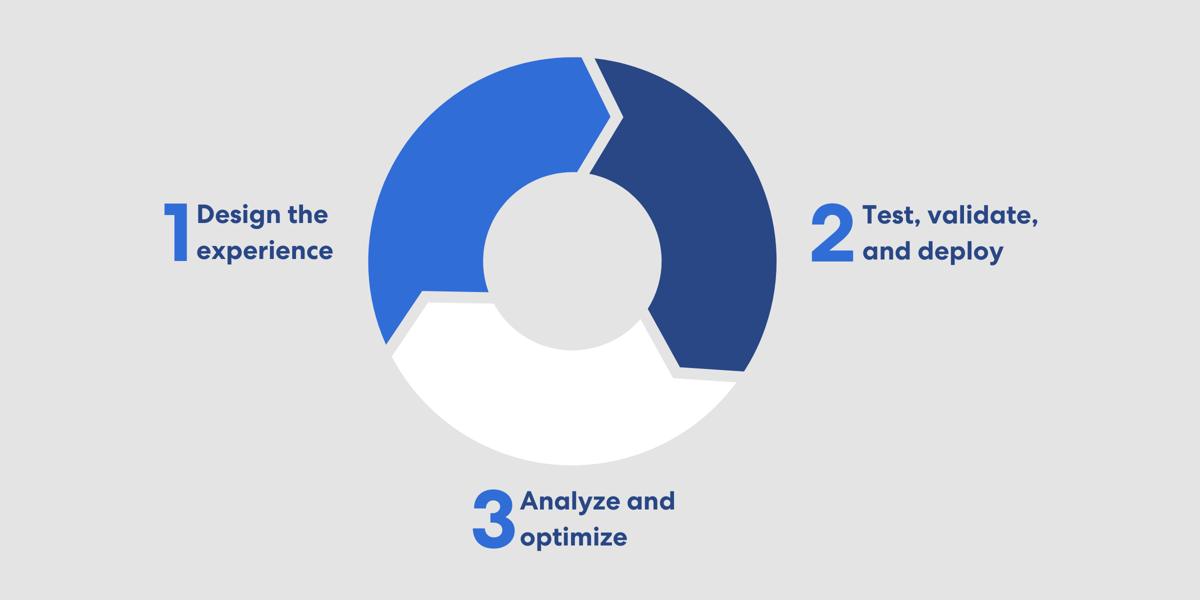

The experience creation life cycle

Behind every great agent-based experience is a team of creative powerhouses — experience designers, conversation designers, and visual designers, each extremely specialized in their craft. They understand people, and how to design the ideal human-centric experiences, with empathy in mind.

Yet while these experience design creatives are the ones orchestrating the experiences on the agent, they often must rely on outside resources to take their visions from conceptualization to reality — especially when it comes to deployment, testing, and analytics.

We believe that it’s time for a change. Non-technical individuals should be empowered to take their visions from A to Z on their own, without having to depend on technical resources or developers.

Different HAI teams should be able to produce a myriad of dynamic, personalized experiences in parallel, on a large quantity of agents in the field — in a way that’s as scalable and efficient as possible.

With Q.Tools, we’ve developed the "no-code" tools and methodology needed to do exactly this — including Q.Studio (our experience creation tool), our deployment tools, analytical tools, and much more.

And with Q.Tools at their fingertips, HAI teams can take full ownership of the experience creation life cycle on the agent, saving everyone some serious time and energy. This life cycle includes:

- Designing the experience. To start, HAI teams need to plan out the overall flow of the experience, and define the agent’s goals (e.g., to get the user to smile or to try out a new feature). Then, taking situational context into account, they’ll begin mapping out and designing the multimodal behavior and creative assets (i.e., speech, sound, visuals, movement, and LEDs) for the agent to execute. In other words, they give the agent a set of instructions on how it should behave throughout each part of the experience.

- Testing, validating, and deploying the agent’s behavior. After everything has been planned, defined and designed, it’s time to start testing out and deploying the experience they created on a real agent. This way, they can see how the experience looks and feels, and make sure that the agent’s interaction decision-making logic is functioning properly — before it’s deployed on the agent with a real user, in the real world.

- Analyzing and optimizing. Once the human-agent interactions take place in the field, a multitude of interested parties (product managers, data teams, marketing, etc.) as well as the HAI designers can begin to analyze and investigate the data, and gain valuable insights from both high-level details (like overall user trends) and small details (like one specific interaction with a user). This way, they can learn what worked, and what didn’t, in order to alter certain aspects accordingly, and create personalized, optimal experiences for each individual user and situation — in a scalable manner.

We’ll be publishing a series of different posts that dive more deeply into each of these components in the near future, but for now, let’s focus on step one — the agent-based experience design process. This is an immensely challenging task on its own, involving numerous complex factors and variables.

Designing an experience is like writing a movie or play, with improv

The process of designing agent-based experiences is a lot more complicated than it might seem — it’s immensely creative, yet highly technical. Our team sees it as combining AI and analytics with the performing arts (directing and acting).

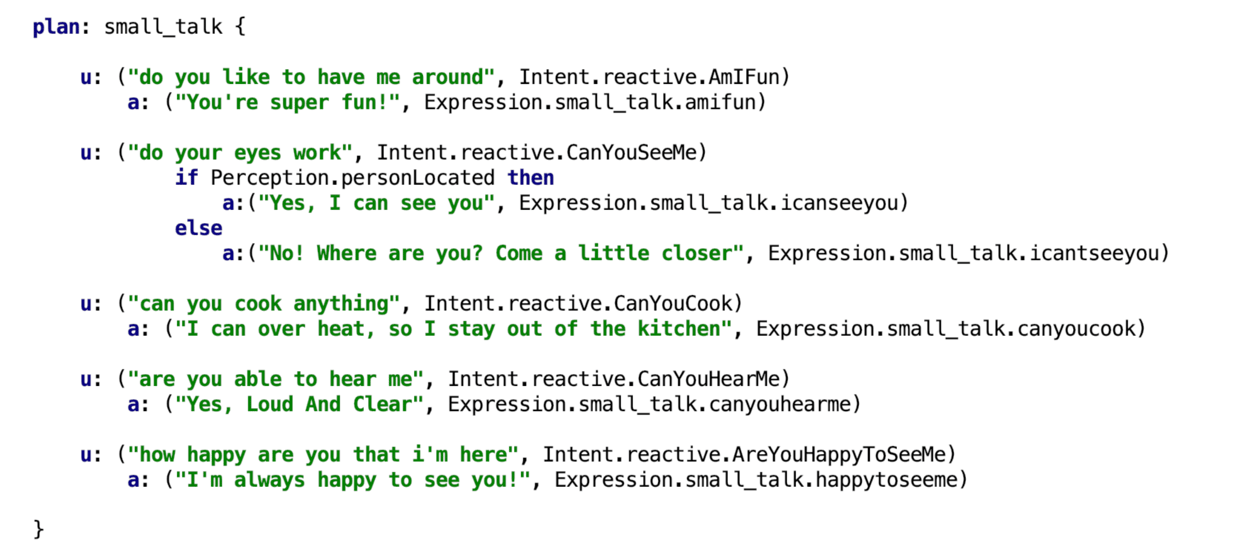

We like to give the analogy that it’s a lot like writing a script for a movie or play, with improvisational elements. The agent is like an improvisational “actor” throughout each “scene” — each interaction or exchange — participating in a multitude of scripted, carefully curated scenes, as well as unexpected improvisational scenes, with its user.

In this “script,” the experience designers (the “directors” behind the scenes) need to provide the agent (the “actor”) with a set of instructions and guidelines on how it should behave in a given situation — including dialogue, timing, and actions — based on the agent’s goals.

The HAI team needs to work together to plan out exactly how the agent should look, sound, and act throughout various situations with its user. The agent executes these instructions (“acts out” the scene) via the multimodal outputs mentioned above (dialogue, on-screen visuals, movement, music, and LEDs).
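To make the analogy a little more concrete, here is a minimal Python sketch of how one “scene” might be written down as data: a goal plus a list of cues, one per modality, each with a start time. It is purely illustrative and is not the Q.Studio format; the names `Cue`, `Scene`, and `timeline`, and the example cue contents, are all assumptions.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical names throughout; this is not the Q.Studio format.
# The modalities are the ones listed in the post.
MODALITIES = {"speech", "sound", "visuals", "movement", "leds"}


@dataclass(frozen=True)
class Cue:
    modality: str   # one of MODALITIES
    start_s: float  # seconds from the start of the scene
    content: str    # what to say, play, show, or do

    def __post_init__(self) -> None:
        if self.modality not in MODALITIES:
            raise ValueError(f"unknown modality: {self.modality}")
        if self.start_s < 0:
            raise ValueError("start_s must be non-negative")


@dataclass
class Scene:
    name: str
    goal: str
    cues: List[Cue] = field(default_factory=list)

    def timeline(self) -> List[Cue]:
        """Return the cues ordered by start time, so cues that share a
        start time sit next to each other and can be played together."""
        return sorted(self.cues, key=lambda cue: cue.start_s)


greeting = Scene(
    name="morning_greeting",
    goal="get the user to smile",
    cues=[
        Cue("speech", 0.5, "Good morning!"),
        Cue("leds", 0.0, "warm pulse"),
        Cue("movement", 0.0, "turn toward user"),
        Cue("visuals", 0.5, "sunrise animation"),
    ],
)

for cue in greeting.timeline():
    print(f"{cue.start_s:>4.1f}s  {cue.modality:<9} {cue.content}")
```

In this sketch, each specialist could contribute cues for their own modality, and the shared timeline is what keeps the outputs in sync.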

By the same notion, in addition to the “director,” other members of the HAI team take on roles similar to those in the performing arts throughout this process.

The conversation designer is like the “screenwriter,” the visual designer is like the “special effects designer,” the team member planning the sound and music is like the “sound designer,” the person in charge of LEDs is like the “lighting designer,” the movement designer is in charge of the “blocking,” and so on.

What makes this process even more challenging than writing, directing, and acting out a movie script is that after the “script” is finalized, HAI teams need to consider a wide variety of potential intents and responses that the agent might encounter with its user.

This needs to be done in a way that’s scalable, yet highly personalized — factoring in the context behind the user’s situational environment, as well as who the user is (modeling of the user based on their prior interactions, preferences, etc.).

Obviously they can’t map out every single possibility, as that wouldn’t be scalable on a large quantity of agents in the field.
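One common way to stay scalable without enumerating every case is to write a handful of variants, each with a rule for when it applies, plus a generic fallback. The Python sketch below illustrates that idea under stated assumptions; it is not how Q.Tools works internally, and `Variant`, `choose_variant`, and the context keys `time_of_day` and `prefers_quiet` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical sketch: none of these names come from Q.Tools.
Context = Dict[str, object]  # situational context merged with the user model


@dataclass
class Variant:
    name: str
    applies: Callable[[Context], bool]  # rule deciding whether the variant fits
    specificity: int                    # higher wins when several variants fit


def choose_variant(variants: List[Variant], context: Context, fallback: str) -> str:
    """Pick the most specific matching variant, or the generic fallback."""
    matching = [v for v in variants if v.applies(context)]
    if not matching:
        return fallback
    return max(matching, key=lambda v: v.specificity).name


greetings = [
    Variant("morning_greeting", lambda c: c.get("time_of_day") == "morning", 1),
    Variant(
        "quiet_morning_greeting",
        lambda c: c.get("time_of_day") == "morning" and c.get("prefers_quiet") is True,
        2,
    ),
]

# A morning user who prefers quiet gets the more specific variant.
print(choose_variant(greetings, {"time_of_day": "morning", "prefers_quiet": True}, "generic_greeting"))
# No rule covers the evening, so the generic fallback is used.
print(choose_variant(greetings, {"time_of_day": "evening"}, "generic_greeting"))
```

The fallback is what keeps the set of hand-written variants small: designers only write rules for the situations they care most about.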

The agent executes these experiences in real life, with real people, which is where the improvisation component comes into play. However much a team tries to plan for every possible outcome, as a “director,” you can only do so much.

You can try to control the agent's behavior, but you can’t really control how things will pan out when it’s interacting with users in a real environment — and something unexpected is almost always bound to occur.

Other components involved

In addition to writing out this “script,” and determining the agent’s behavior for any given situation with its user, there are a few other important elements involved in the agent-based experience design process.

For one, before they can home in on the small details, UX teams should think about the big picture, and how to create a sustainable, lasting experience that keeps the human user engaged over time.

They need to have a clear understanding of the agent’s goals, and a strategy in place to accomplish them. For example, is its goal to get the user to try out a new feature, or to accomplish a certain task? Or, is it something more abstract, like getting them to laugh or smile?

Moreover, in order to design truly fantastic agent-based experiences, it’s essential to have a well-rounded team of experience design experts.

At the very least, each team should have a top-notch experience designer (again, the “director” planning the overall flow of each “scene”), a conversation designer (specialized in writing the agent’s speech and dialogue), and a visual designer (for on-screen visuals, animation, and LEDs).

Each team member is highly specialized in their craft, and plays a crucial role in the process, inserting their distinct piece of the puzzle into the final result.

And last but not least, each team needs to have a method in mind that will allow them to work together efficiently.

Since each team member is working on a different component of the overall experience, they’ll need to find the right balance between collaborating and communicating effectively with their team members, and working on their individual components of the experience.

Democratizing the future of agent-based UX with Q.Tools


As our relationship and experiences with technology continue to evolve, human-centric design should be a driving force in the next generation of agent-based experiences.

Therefore, the creative individuals at the center of the HAI design process — the ones specialized in understanding people — should be able to fully support and control the entire experience creation life cycle on their own, rather than continuously relying on outside resources like data scientists or engineers.

From defining the interaction flow and the agent’s goals, to creating rich content via multiple modalities, to deployment, quick iterations, and analytics, Q.Tools empowers HAI teams to take ownership of the entire experience design process, creating effective, personalized experiences at scale like never before.

With Q.Tools, UX creatives can quickly take their vision from an idea to the “market” — the real-life user — so they can focus on maximizing the productivity and velocity of these agent-based experiences.

When experience design experts are the ones calling the shots and taking the agent-based experience into their own hands, the opportunities are endless.

As we look toward the shift from digital assistants to digital companion agents and the next generation of HAI, we’re excited about where intelligent agents will take us, and the possibilities that Q.Tools can provide throughout this exciting transition.
