If there’s one phrase that we’ve heard countless times throughout this pandemic, it’s that “humans are social beings.” We absolutely are — and our innate ability to form bonds and make connections with those around us is an essential component of who we are. Yet today, perhaps the most prevalent, perpetual “relationship” within many of our lives — especially as we self-isolate at home — is not with other people, but rather, with technology.

We each have a unique bond with the tech in our lives, and we’re constantly interacting with different devices, agents, and interfaces throughout the entire day — from first thing in the morning, to the moment before we doze off to sleep. But relationships come in many shapes and forms, and just like our relationships with other humans or our pets, each type of technology that we interact with serves a unique role and purpose within our lives — whether it’s to entertain us, facilitate or streamline tasks, teach us new information, or otherwise.

Yet no matter how much our relationship with technology has changed over the years, it remains a completely one-sided paradigm. We tell our devices what to do, and they carry out our demands; it's as simple as that. What if, instead, technology were able to create a new type of relationship and dynamic with us? As we transition toward a new era of proactive, context-aware digital companion agents, it soon will.

Of course, these agents will never fully replace our relationships with other people. But in order for them to truly add value to our lives, there are certain human-like aspects they’ll need to incorporate. In this post, we’ll explain how we can expect the human-agent dynamic to evolve, and why agents should rely on three components — gaining our trust, forming a bond with us, and maintaining our interest — in order to create successful interactions with us over time.

How our relationship with technology has evolved thus far

In the last few years alone, we’ve seen a major change in the way we interact with technology, including the rise of smart homes (complete with Roombas, smart appliances, thermostats, and the like) and of voice user interfaces equipped with digital assistants (e.g., Alexa, Siri, and Google Assistant). In fact, as of 2019, about 3 billion digital voice assistants were in use worldwide. By 2024, that number is expected to nearly triple, to 8.4 billion.

Yet despite how much has changed in the technology we use every day, digital assistants still create the same one-sided experience we’ve always had: the agent waits for our commands and executes them accordingly. That is about to change.

Enter digital companion agents — the natural evolution of digital assistants, replacing utilitarian voice command with a new, bidirectional relationship based on empathy, trust, and anticipation of our individual needs and preferences. Rather than waiting on standby for our command, these agents will make proactive suggestions to pique our interest and positively influence our behaviors, routines, and emotions.

Instead of waiting for us to initiate, they intuitively know if, when, and how to engage with us in an optimal way. And just as a person would, they use situational context and what they remember about us to create meaningful, personalized interactions.

Looking ahead, these agents will be embedded in a variety of machines throughout our lives, from our kitchens and cars to the ATM, each serving its own role and purpose. But as with human-human interactions, for human-agent interactions to flourish over time, the agent must establish and sustain three key factors: a relationship, our trust, and our interest.

Establishing the human-agent relationship

First and foremost, it’s important to recognize that the human-agent relationship will never be like a human-human relationship, and it’s by no means intended to replace our relationships with other people. Rather, agents are meant to augment and enhance our abilities, working as teammates and/or sidekicks alongside us.

And while agents do need to incorporate certain human-like components in order to effectively interact with us in a way that feels natural and seamless, there are many traits of ours that they can never fully possess or embody — making agent design a highly complex process. Before we get into how human-agent relationships can succeed, we first need to understand a bit more about relationships in general.

Why do we build relationships with other people? Typically, it’s because we get some sort of emotional or practical value out of our interactions with them. They might teach us new things, help us in our careers, or make certain moments of our day more intriguing; you get the idea. The same holds true with an agent: at the end of the day, we only interact with something if it provides us with some personal value or benefit.

Still, our relationships aren’t so easily categorized, and we each have many different types of relationships in our lives. For instance, our relationships with our romantic partners are dramatically different from those with our family, bosses, colleagues, hairdressers, mechanics, and so on. Again, the same goes for agents: the relationship we’d form with an agent that helps us in the kitchen every day would be completely different from the one we’d have with an agent embedded in an ATM, in our car, at a check-in desk, and so on.

So when it comes to an agent we interact with on a regular basis, how can it establish an effective relationship that truly serves a purpose for us? Meaningful relationships don’t happen overnight; they build up over time. With other people, the relationship-building process typically involves the following five stages, and for an agent, it’s more or less the same:

- Acquaintanceship — Becoming acquainted depends on previous relationships, physical proximity, first impressions, and a variety of other factors. If the two parties begin to like each other, continued interactions may lead to the next stage. If not, they may remain acquaintances indefinitely.

- Buildup — During this stage, we begin to trust and care about each other. Compatibility, the need for intimacy, and filtering factors such as a common background and shared goals influence whether the interaction continues.

- Continuation — This stage follows a mutual commitment to a strong and close long-term friendship. It is generally a long, relatively stable period. Nevertheless, continued growth and development will occur during this stage, and mutual trust is important for sustaining the relationship.

- Deterioration — Not all relationships deteriorate, but plenty do with time. Boredom, resentment, and dissatisfaction may occur, and individuals may communicate less and avoid self-disclosure. Loss of trust and betrayals may take place as the downward spiral continues, eventually ending the relationship. (Alternatively, the parties involved may find a way to resolve their problems and reestablish trust and belief in each other.)

- Ending — With other humans, the final stage marks the end of the relationship, whether through a breakup, death, or a prolonged physical separation that severs all existing ties. With an agent, this translates to any reason we stop interacting with it altogether: it’s been discontinued, or we simply don’t find value in it anymore.

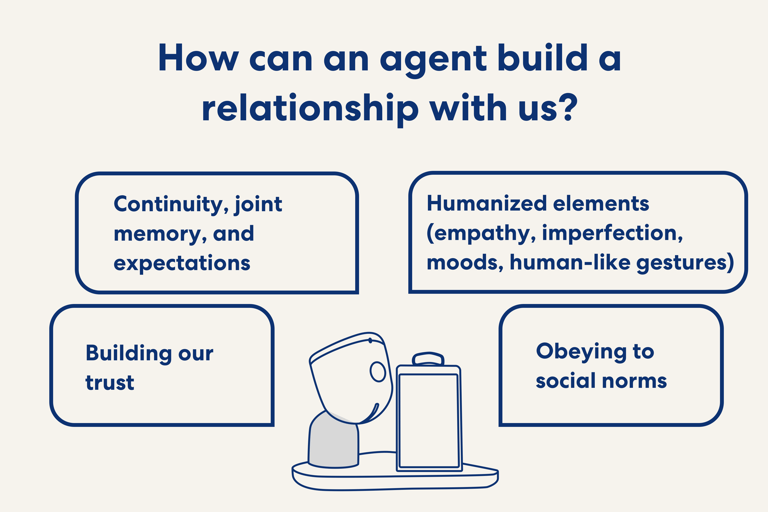

Additionally, the agent should incorporate the same characteristics needed to build successful human-human relationships over time, but in a distinct way that signals to us that we’re interacting with an agent, not a human.

For example, though an agent can embody and evoke certain aspects of empathy, it cannot truly feel empathy, and that should be clear to us. Though it can incorporate certain human-like gestures and behaviors, it shouldn’t fully look and behave like a human — and so on.

Building our trust: Design principles the agent must embody

What exactly is trust? In terms of psychology and sociology, trust is a measure of our belief in the honesty, fairness, integrity, or benevolence of another party. And just as our relationships aren’t built in a day, developing our trust is an ongoing, gradual process.

It can take some serious time and effort for us to feel comfortable opening up and trusting anyone — be it other humans, animals, or otherwise — thus making it all the more difficult for an agent to establish our trust. It’s also very easy for others to break our trust — by being dishonest, misleading, not keeping promises, or otherwise.

Obviously, agents lack the ability to fully trust us, but they should make us feel that we can trust them. How can they build our trust? Again, it’s rather complicated, but it is possible, so long as the agent incorporates the right components, including certain empathetic design principles (explained further in this blog), as well as the following:

- Transparency and expectations — This is perhaps the most important of all. In order to gain our trust, the agent must set clear expectations with us regarding its functionality, and what it can and can’t do. It should also show vulnerability, and take full responsibility for its mistakes.

- Mutuality and reciprocity — By asking us open-ended questions, learning about our interests, and asking for our feedback, the agent helps us feel comfortable opening up and sharing things with it. Ultimately, this also paves the way for working towards a common goal together, which can help deepen our level of trust even further.

- Demonstrating its value — As with human relationships, the agent needs to show us that using it is worthwhile and that we can in fact benefit from interacting with it over time.

- Consistency and predictability — When we interact with the agent, we want to know what to expect and to feel confident that it will behave and perform as we anticipate. This ties back to setting clear expectations.

- Privacy — The agent should make us feel safe and secure, and that there are no privacy concerns regarding our data, or us sharing personal information about ourselves with it.

In building our trust, it’s also important to note that the agent must be very careful about its wording, behavior, and how it expresses itself. The designers creating the agent’s interactions need to thoroughly consider how it could potentially break our trust, and how it should act, move, sound, and phrase its questions to avoid doing so.

There’s also a noteworthy correlation between trust and the brand behind the agent. Think about it: we all have certain expectations when it comes to brands. There are established, well-known brands that we’ve come to trust over time (after using them for years), and new, smaller brands that we don’t feel as comfortable with.

As such, when we’re engaging with an agent from a well-known brand, we might have higher expectations of it, and if it doesn’t perform accordingly, that can easily break our trust. By contrast, with an agent from a lesser-known brand (such as ElliQ), though it might take a while to build our trust, we’d likely be more forgiving when it makes mistakes.

Keeping us interested and motivated to interact

Relationships come and go at different points in our lives, depending on our circumstances and whether the relationship is serving our needs. Just as we have certain motivations to interact with other people, our motivation to interact with an agent might be that it provides us with utility, fun, mood enhancement, personal gain, convenience, or something else.

Thus, for us to keep pursuing those interactions in the long run, there needs to be some sort of personal gain involved: a give and take. We need to feel that we’re getting something out of the dynamic in order to continue with it. If not, these interactions will naturally fizzle out over time.

For example, imagine a friend who no longer responds to your calls or messages. Eventually, you’d realize they’re not worth the effort, since your attempts to reach them bring nothing in return; over time, you’d lose interest and stop trying to interact with them altogether.

With the agent, it’s the same. In order for our interactions with the agent to be successful over time, the agent must hook us in initially, then periodically demonstrate the value we can derive from it. If the agent is no longer able to demonstrate its value, motivate us to interact with it, or maintain an advantageous purpose in our lives, we’ll eventually lose interest in it.

Challenges ahead

As mentioned throughout this article, human-agent relationships and dynamics are extremely complex — so naturally, the design process comes with its fair share of challenges. For now, it’s still very difficult for the agent to know and measure how it’s doing, and whether or not it has in fact been successful in establishing and sustaining a relationship with us.

How do you quantify how strong the relationship is? How do you know what stage it’s in, or whether it’s improving or worsening? Sensors that read facial expressions can help, but their readings are often hard to interpret, especially when we’re alone (and less prone to making facial expressions). There are also outliers: facial expressions aren’t universal, and some people might appear to be experiencing one emotion when in reality they’re feeling something else.

Additionally, it’s tough to assess the honesty and integrity of the human user. Some people might be dishonest with their agent, and the agent has no way of knowing. For example, when ElliQ asks a user whether they took their medication, the user could respond, “Yes, ElliQ, I took my medicine,” when in reality they did not, and ElliQ cannot verify whether the statement is accurate.

Moving forward: The future human-agent relationship

Looking ahead, the human-agent relationship as we know it will continue to evolve tremendously, and agents will play a growing role in our daily lives. But just as our relationships with other people are convoluted and not always black-and-white, human-agent dynamics are just as complicated, if not more so.

For human-agent interactions to truly thrive in a sustainable way, digital companion agents must be able to establish our trust, form meaningful connections with us, maintain our interest, and motivate us to interact with them over time. Making all of this possible requires both advanced AI and decision-making technology and effective, carefully considered interaction design, which makes it a complex yet exciting challenge.

For now, our team continues to research and explore this fascinating topic with our own digital companion agents, ElliQ and Q for Automotive, powered by Q, our cognitive AI engine. These agents engage with users via a myriad of interactive experiences, designed by our team of interaction designers. We look forward to watching the next generation of human-agent relationships unfold, and to gaining a deeper understanding of the vast impact these agents could have on our future society.